Methods of Web Application Fingerprinting

Historically, open source applications have been easier to identify because their behavior patterns and source code are publicly available. In the early days, identifying a web application was as simple as looking for text like "Powered by <XYZ>" in the page footer. However, as more server administrators became aware of this, penetration testers' approaches to identifying the web application running on a remote machine became more complex.

HTML Data Inspection

This is the simplest method. The manual approach is to open the site in a browser and look at its source code. An automated tool does the same thing: it connects to the site, downloads the page, and runs a set of basic regular expressions against it, each of which gives a yes or no answer. What we are looking for is a pattern unique to a specific piece of web software. Examples of such patterns are:

1) WordPress

Meta tags and folder names

Folder names in the link section (for example, /wp-content/)

The ever-present notice at the bottom of the page

2) OWA

URL pattern: http://<site_name>/OWA/

3) Joomla

URL pattern: http://<site_name>/component/

4) SharePoint Portal

URL pattern: /_layouts/*

Similarly, regular expression rules can be written to identify the majority of other applications.

These regular expressions can be combined into a monolithic tool that identifies everything in one pass, or organized as a pluggable architecture with one pattern file per application type. Examples of tools using this technique include browser plugins such as Wappalyzer, web technology finders, and similar tools.

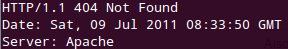
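A minimal sketch of the pattern-matching idea in Python, assuming the page source has already been downloaded. The `SIGNATURES` table and the `fingerprint_html` function are hypothetical names, and the regexes are illustrative guesses based on the examples above, not a complete or authoritative rule set. Keeping one entry per application mirrors the pluggable, one-pattern-file-per-type design.

```python
import re
from typing import Dict, List

# Hypothetical signature table: application name -> regexes hinting at it.
# These patterns are illustrative assumptions, not a complete rule set.
SIGNATURES: Dict[str, List[re.Pattern]] = {
    "WordPress": [
        # <meta name="generator" content="WordPress ...">
        re.compile(r'<meta[^>]+name=["\']generator["\'][^>]+content=["\']WordPress', re.I),
        # Folder names that appear in link/script URLs
        re.compile(r'/wp-(?:content|includes)/', re.I),
    ],
    "Joomla": [re.compile(r'/component/', re.I)],
    "SharePoint": [re.compile(r'/_layouts/', re.I)],
    "Outlook Web App": [re.compile(r'/owa/', re.I)],
}

def fingerprint_html(html: str) -> List[str]:
    """Return every application name with at least one pattern matching the page source."""
    return [name for name, patterns in SIGNATURES.items()
            if any(p.search(html) for p in patterns)]
```

For example, a page whose stylesheet is linked from `/wp-content/themes/...` would be reported as `["WordPress"]`, while a page with no matching pattern yields an empty list (a "no" for every application).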

File and Folder Presence (HTTP response codes)

This approach does not download and inspect the page content. Instead, it looks for obvious traces of an application by requesting specific URLs directly, and from the responses builds a list of applications found and not found. In the early days of the internet this was easy: download the headers, check whether the response is 200 OK or 404 Not Found, and you are done.

Today, however, many sites serve custom 404 pages that actually return 200 OK when a page is not found. This complicates detection, so the newer approach is as follows:

1) Download the default page (200 OK).

2) Download a file that is guaranteed not to exist, mark the response as the 404 template, and then proceed with the detection logic.
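The not-found baseline can be sketched with Python's standard `urllib` and `difflib` modules. The helper names (`fetch`, `not_found_template`, `is_not_found`) and the 0.9 similarity threshold are illustrative assumptions; real tools may instead compare page length, titles, or hashes rather than a text-similarity ratio. The default page from step 1 can be fetched with the same `fetch` helper.

```python
import difflib
import urllib.error
import urllib.request
import uuid
from typing import Tuple

def fetch(url: str, timeout: float = 10.0) -> Tuple[int, str]:
    """Return (status code, body text); HTTP errors such as 404 are returned, not raised."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, err.read().decode("utf-8", errors="replace")

def not_found_template(base_url: str) -> Tuple[int, str]:
    """Step 2: request a random path that almost certainly does not exist."""
    return fetch(f"{base_url.rstrip('/')}/{uuid.uuid4().hex}.html")

def is_not_found(status: int, body: str, template: Tuple[int, str],
                 threshold: float = 0.9) -> bool:
    """A real 404, or a response with the template's status and a near-identical body."""
    if status == 404:
        return True
    tmpl_status, tmpl_body = template
    if status != tmpl_status:
        return False  # status differs from the "missing page" response
    similarity = difflib.SequenceMatcher(None, body, tmpl_body).ratio()
    return similarity >= threshold
```

The design choice here is to trust a genuine 404 outright and only fall back to content comparison when the server answers missing pages with the same status as real ones.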

With this baseline in place, such tools look for known files and folders on the website and try to determine the exact application name and version. For example, wp-login.php => WordPress, and /owa/ => Microsoft Outlook Web App (the Outlook web front end).
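Probing for known paths can then be sketched as below. The `KNOWN_PATHS` table is hypothetical and built only from the examples in this post. `probe_known_paths` takes the fetch function and the not-found check as parameters (any function returning a `(status, body)` pair, and any check built from the 404 template described above), which also lets it be tested without touching a live site.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical probe list taken from the examples in this post; extend per application.
KNOWN_PATHS: Dict[str, str] = {
    "/wp-login.php": "WordPress",
    "/owa/": "Microsoft Outlook Web App",
    "/_layouts/": "SharePoint",
    "/component/": "Joomla",
}

Fetcher = Callable[[str], Tuple[int, str]]
NotFoundCheck = Callable[[int, str], bool]

def probe_known_paths(base_url: str, fetch: Fetcher, not_found: NotFoundCheck) -> List[str]:
    """Request each known path and return the applications whose path appears to exist."""
    found: List[str] = []
    for path, app in KNOWN_PATHS.items():
        status, body = fetch(base_url.rstrip("/") + path)
        if not not_found(status, body) and app not in found:
            found.append(app)
    return found
```

Every entry in the table is one extra request, which is exactly where the noise discussed below comes from.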

Checksum-Based Identification

This is a relatively new approach, considered by far the most accurate for identifying both the application and its specific version. The technique works as follows:

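1) Compute checksums of local copies of the application's static files and store them in a database.

2) Download the same static file from the remote server.

3) Compute its checksum.

4) Compare it with the checksums stored in the database to identify the application and version.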
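The four steps map onto a few lines of Python using `hashlib`. This is a sketch under stated assumptions: SHA-256 is an arbitrary choice of checksum, an in-memory dictionary stands in for the database, and `build_db`, `download`, `identify`, and the `(application, version)` tuples are hypothetical names. Because several releases can ship an identical static file, each checksum maps to a list of candidate releases rather than a single version.

```python
import hashlib
import urllib.request
from typing import Dict, Iterable, List, Tuple

Release = Tuple[str, str]  # (application name, version)

def checksum(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()

def build_db(local_files: Iterable[Tuple[Release, bytes]]) -> Dict[str, List[Release]]:
    """Step 1: checksum local copies of static files and index them by digest."""
    db: Dict[str, List[Release]] = {}
    for release, data in local_files:
        db.setdefault(checksum(data), []).append(release)
    return db

def download(url: str, timeout: float = 10.0) -> bytes:
    """Step 2: fetch the same static file from the remote server."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()

def identify(remote_file: bytes, db: Dict[str, List[Release]]) -> List[Release]:
    """Steps 3 and 4: checksum the remote file and look it up in the database."""
    return db.get(checksum(remote_file), [])
```

Usage would look like `identify(download("http://<site_name>/<static_file>"), db)`, where the path is a placeholder for whichever static file was indexed locally. An empty result means the file is unknown or modified.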

Disadvantages of Current Automated Solutions

1) First and foremost, these tools are noisy, especially in auto-detection mode.

2) Large numbers of 404 responses can immediately trigger alarms across the target's monitoring systems.

3) They generally rely on the URL pattern we give them and fail to look beyond it. However, the site's main page might link to its blog, which may not be kept up to date and could open the door for us.

4) They lack the fuzzy, intuitive judgment of a human tester.
